Surya Pratap Singh

Research Engineer at UMich

About Me

I am a Research Engineer at the Computational Autonomy and Robotics (CURLY) Lab at the University of Michigan (UMich), working at the intersection of robotics, machine learning, and computer vision. My current research, in collaboration with Professor Maani Ghaffari, focuses on SLAM, State Estimation, 3D Vision, and Perception. I currently lead the development of the autonomy stack for a marine surface vehicle.

Previously, as a graduate student in the Robotics Department at UMich, I was a research assistant at the Ford Center for Autonomous Vehicles (FCAV) Lab, working with Professor Ram Vasudevan and Dr. Elena Shrestha. My research explored model-based reinforcement learning for robot control and multimodal perception for scene understanding. As a graduate student, I also gained significant exposure to motion planning and embedded systems.

Before joining UMich, I interned at the Biorobotics Lab at Carnegie Mellon University (CMU), where I collaborated with Dr. Howie Choset, Dr. Matthew Travers, and industry partners from Apple on an electronic-waste recycling project. This experience deepened both my technical expertise and my ability to communicate complex ideas while working closely with industry stakeholders.

I hold a bachelor's degree in Mechanical Engineering with a minor in Robotics and Automation from the Birla Institute of Technology & Science (BITS Pilani), where I developed a strong foundation in classical control of robotic manipulators and learning-based control of UAVs under the mentorship of Professor Arshad Javed.

Beyond robotics, I am passionate about applying machine learning and computer vision to diverse fields such as healthcare and 3D scene reconstruction. I aim to leverage my interdisciplinary expertise to solve complex challenges across various industries.

Experience

Research Engineer

September 2024 - Present, University of Michigan, Ann Arbor, MI, US

Perception & Machine Learning Research Assistant

June 2023 - July 2024, University of Michigan, Ann Arbor, MI, US

Reinforcement Learning Research Intern

May 2021 - May 2022, Carnegie Mellon University, Pittsburgh, PA, US

Motion Planning Intern (Software)

May 2019 - July 2019, Indira Gandhi Centre for Atomic Research, Kalpakkam, TN, India

Highlight Projects

Autonomous Surface Vehicle

Key Skills & Technologies: Autonomy, Machine Learning / Deep Learning, 3D Semantic Mapping, Convolutional Bayesian Kernel Inference, LiDAR Point Cloud Processing, Object Detection & Segmentation, Grounded SAM2, 3D-to-2D Projection, DROID-SLAM, Invariant Extended Kalman Filter, DRIFT, IMU-based Preintegration, GPS-based Correction, ArduPilot, MAVLink, PyTorch, ROS2, C++, Python

Description: A full-stack autonomy system is being developed for a marine surface vehicle. ROS2-to-MAVLink communication links the software stack to the hardware, and an IMU, GPS, LiDAR, and stereo RGB camera are integrated with the ArduPilot framework. For localization, DROID-SLAM with SAM2 (used to mask out water) initially achieved an error of ~1.5 m; this was later reduced to under 20 cm using the DRIFT algorithm with IMU preintegration and GPS correction. To build a 3D semantic map, Convolutional Bayesian Kernel Inference was applied to LiDAR point clouds, with per-point labels obtained by projecting the points onto 2D segmentation masks generated by Grounded SAM2.
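The label-transfer step above (projecting LiDAR points onto 2D masks) can be sketched as follows. This is a minimal illustration, not the project's implementation: it assumes a calibrated pinhole camera with intrinsic matrix `K`, a known LiDAR-to-camera extrinsic `T_cam_lidar`, and a per-pixel integer class mask; the function name `label_points_from_mask` and the `-1` "unlabeled" value are hypothetical.

```python
import numpy as np

def label_points_from_mask(points_lidar, T_cam_lidar, K, mask, unlabeled=-1):
    """Give each LiDAR point the class ID of the mask pixel it projects onto.

    points_lidar: (N, 3) points in the LiDAR frame.
    T_cam_lidar:  (4, 4) LiDAR-to-camera extrinsic (assumed known from calibration).
    K:            (3, 3) pinhole intrinsic matrix (assumed, no lens distortion).
    mask:         (H, W) integer class-ID image from a 2D segmenter.
    """
    n = points_lidar.shape[0]
    # Homogeneous coordinates, then move the points into the camera frame.
    pts_h = np.hstack([points_lidar, np.ones((n, 1))])
    pts_cam = (T_cam_lidar @ pts_h.T).T[:, :3]

    labels = np.full(n, unlabeled, dtype=int)
    in_front = pts_cam[:, 2] > 1e-6  # only points in front of the camera can project
    uvw = (K @ pts_cam[in_front].T).T
    u = np.floor(uvw[:, 0] / uvw[:, 2]).astype(int)  # pixel column
    v = np.floor(uvw[:, 1] / uvw[:, 2]).astype(int)  # pixel row

    h, w = mask.shape
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    idx = np.flatnonzero(in_front)[inside]  # original indices of points that hit the image
    labels[idx] = mask[v[inside], u[inside]]
    return labels
```

Points that fall behind the camera or outside the image keep the unlabeled value, so a downstream mapper can decide whether to ignore them.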

Multimodal Perception for Autonomous Racing [Code (Coming Soon)] [Video]

Key Skills & Technologies: Multimodal Scene Understanding, Machine Learning / Deep Learning, Image Segmentation, UNet, Variational Autoencoder (VAE), Mixture of Softmaxes, Online & Offline RL, TD-MPC, DreamerV3, ROS, ROS Nodelet, Docker, PyTorch, Python

Description: A robust multi-terrain control policy for autonomous racing was developed via reinforcement learning using LiDAR and RGB camera inputs. To improve visual sim-to-real transfer, a lightweight UNet model segmented the key scene classes (floor, opponent rover, walls, and background), achieving a Dice score of 0.99. Optimizing ROS nodelets and improving ROS-Docker communication raised the update rate from 10 Hz to 30 Hz, enabling the rover to reach speeds of 2.5 m/s. Self-supervised sensor fusion with a Mixture of Softmaxes (MoS) effectively combined LiDAR and RGB data, yielding a 60% win rate in head-to-head races against a dynamic rule-based gap-follower agent. Finally, offline reinforcement learning with TD-MPC produced strong policies in under 15 episodes using only 5K-10K transitions, a substantial reduction in sample complexity compared with the over 300K episodes required for online training.
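The Dice score reported above measures the overlap between predicted and ground-truth segmentation masks. Below is a minimal NumPy sketch of a mean per-class Dice, assuming integer label maps (for example, four classes for floor, opponent rover, walls, and background). The function name and the choice to skip classes absent from both maps are assumptions; the project may have averaged differently.

```python
import numpy as np

def dice_score(pred, target, num_classes):
    """Mean Dice coefficient over classes for two integer label maps of equal shape.

    Dice for class c is 2|P & T| / (|P| + |T|), where P and T are the pixels
    labelled c in the prediction and the ground truth.
    """
    scores = []
    for c in range(num_classes):
        p = pred == c
        t = target == c
        denom = p.sum() + t.sum()
        if denom == 0:
            # Class absent from both maps: skip it rather than count it as perfect.
            continue
        scores.append(2.0 * np.logical_and(p, t).sum() / denom)
    return float(np.mean(scores))
```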

Electronic-Waste Recycling

Key Skills & Technologies: 6-DoF Parallel Manipulator, MuJoCo, Robosuite, Numerical Optimization, Forward Kinematics, PD Controller, Singularity Avoidance, Multi-Object Tracking, Classical Computer Vision, OpenCV, SORT Tracker, Machine Learning / Deep Learning, DDPG, Hindsight Experience Replay (HER), PyTorch, Python

Description: In collaboration with clients from Apple, developed an object-agnostic gripping strategy for manipulating e-waste at high speed and with high accuracy. Co-led the simulation team in integrating a 6-DoF parallel manipulator with MuJoCo using a waypoint-following PD controller, achieving joint errors under 8 mm (linear) and 0.1 rad (rotational). Created a numerical-optimization-based forward kinematics approach with maximum errors of 5 mm (position) and 0.75 rad (orientation). Built a pseudo-Jacobian matrix for singularity avoidance. Developed a multi-object tracking module using classical computer vision techniques with OpenCV and the SORT tracker. Implemented a DDPG + HER deep RL algorithm in the MuJoCo-powered Robosuite, reaching a 95.55% success rate at conveyor speeds of up to 0.5 m/s.
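The waypoint-following PD control idea can be illustrated on a much simpler plant. This sketch assumes each joint behaves as an independent unit-mass double integrator with no gravity or coupling, which is far simpler than the MuJoCo model of the parallel manipulator; the gains, time step, and the function name `follow_waypoints_pd` are hypothetical.

```python
import numpy as np

def follow_waypoints_pd(waypoints, kp=40.0, kd=12.0, dt=1e-3, steps_per_waypoint=2000):
    """Drive every joint through a sequence of waypoints with a PD law.

    waypoints: (M, J) array of target joint values, visited in order.
    Returns the (M, J) joint values reached at the end of each waypoint segment.
    """
    waypoints = np.asarray(waypoints, dtype=float)
    q = np.zeros(waypoints.shape[1])   # joint positions
    qd = np.zeros_like(q)              # joint velocities
    reached = []
    for target in waypoints:
        for _ in range(steps_per_waypoint):
            # PD law toward the current waypoint (desired velocity taken as zero).
            u = kp * (target - q) - kd * qd
            # Semi-implicit Euler step of the assumed unit-mass double integrator.
            qd = qd + u * dt
            q = q + qd * dt
        reached.append(q.copy())
    return np.array(reached)
```

With these gains the closed loop is slightly underdamped (damping ratio about 0.95), so each segment settles well within its 2 s budget.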

MBot Autonomy: Control, Perception, and Navigation

Key Skills & Technologies: Autonomous Navigation, Lightweight Communications and Marshalling (LCM), Multi-Hierarchical Motion Controller, SLAM, Monte Carlo Localization, Dead Reckoning, Gyroscope + Wheel Encoder-based Odometry, 2D Occupancy Grid Mapping, A* Path Planning, Frontier Exploration, Offline Reinforcement Learning, Sensor Fusion, Docker, UNet Model Development, Python, C

Description: A full-stack robotic system was developed for autonomous warehouse navigation on the MBot platform. A multi-hierarchical motion controller achieved a 2.5 cm RMS trajectory error, with velocity errors of 0.025 m/s (linear) and 0.045 rad/s (angular). SLAM with 2D occupancy grid mapping and Monte Carlo localization yielded a 6.63 cm RMS localization error relative to gyrodometry. An A* path planner with collision avoidance, combined with frontier exploration, achieved 100% success in optimal path-finding and consistently returned the robot to within 5 cm of its home position.
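A compact sketch of A* on a 2D occupancy grid, of the kind used by the planner described above. It assumes a 4-connected grid with unit step costs and a Manhattan-distance heuristic; obstacle inflation for collision margins and frontier selection are omitted, and the function name `astar` is illustrative rather than the MBot codebase's API.

```python
import heapq

def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid (0 = free, 1 = occupied).

    Returns the list of (row, col) cells from start to goal, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):
        # Manhattan distance: admissible and consistent for unit-cost 4-connected moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    came_from = {}
    g_cost = {start: 0}
    closed = set()
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue  # stale heap entry
        if cell == goal:
            path = [cell]
            while cell in came_from:
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        closed.add(cell)
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```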

Autonomous Robotic Arm: Vision-Guided Manipulation

Key Skills & Technologies: Manipulation, Computer Vision, GUI Development, PID Controller, Forward & Inverse Kinematics, RGB-D Camera, Camera Calibration, AprilTags, Object Detection & Sorting, OpenCV, ROS2, Python

Description: A GUI-based control and vision-guided autonomy system was developed for the RX200 arm. The arm and an RGB-D camera were integrated into the ROS2 framework, and a PID controller achieved an end-effector positioning error below 2 cm in block-swapping tasks. Automatic camera calibration using AprilTags, together with forward and inverse kinematics, enabled 100% success in click-to-grab/drop tasks within a 35 cm radius. OpenCV-based detection of block color (VIBGYOR) and size achieved 100% sorting accuracy.
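For click-to-grab, inverse kinematics maps a target position to joint angles. The RX200 has more joints than the model here, so the following is only a simplified planar two-link analogue with assumed link lengths: it shows the closed-form law-of-cosines solution and checks it against forward kinematics. The function names `fk_2link` and `ik_2link` are hypothetical.

```python
import math

def fk_2link(theta1, theta2, l1, l2):
    """Planar forward kinematics: joint angles (rad) -> end-effector (x, y)."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y

def ik_2link(x, y, l1, l2, elbow=1):
    """Closed-form planar inverse kinematics.

    elbow=+1 or -1 selects one of the two mirror-image solutions.
    Raises ValueError if (x, y) is outside the reachable annulus.
    """
    # Law of cosines gives cos(theta2) from the squared distance to the target.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0 + 1e-12:
        raise ValueError("target is outside the reachable workspace")
    c2 = max(-1.0, min(1.0, c2))  # clamp tiny numerical overshoot
    theta2 = math.atan2(elbow * math.sqrt(1.0 - c2 * c2), c2)
    # Shoulder angle: direction to the target minus the offset caused by link 2.
    theta1 = math.atan2(y, x) - math.atan2(l2 * math.sin(theta2), l1 + l2 * math.cos(theta2))
    return theta1, theta2
```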

SelfDriveSuite: Vehicle Control and Scene Understanding [Code]

Key Skills & Technologies: Adaptive Cruise Control, Model Predictive Control, Optimization (Quadratic Programming), Image Classification, Semantic Segmentation, UNet, Machine Learning / Deep Learning, PyTorch, Python

Description: This project developed autonomous driving algorithms covering adaptive cruise control, model predictive control (MPC), and robust scene understanding. A QP-based minimum-norm controller maintained a safe following distance of 1.8 times the ego vehicle's velocity (a 1.8 s time headway). Linear and nonlinear MPCs achieved precise path tracking with RMS errors below 1 m (position), 0.5 rad (orientation), 0.5 m/s (velocity), and 1 m/s² (acceleration). For scene understanding, a 2D image classifier reached 90% accuracy on blurry images, and a 15-class UNet-based segmentation model trained under varied weather conditions achieved 81.2% mIoU.
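One common way to pose a minimum-norm safety controller for adaptive cruise control is as a QP with a control barrier function (CBF) constraint on h = gap - 1.8 * v. With a single affine constraint, the QP has a closed-form solution, sketched below. The point-mass dynamics, the gain `gamma`, and both function names are assumptions for illustration; the project's actual formulation (for example, with drag terms or an added CLF constraint) may differ.

```python
import numpy as np

def min_norm_filter(u_ref, a, b):
    """Solve  min_u ||u - u_ref||^2  s.t.  a @ u <= b  for one affine constraint.

    Closed form: keep u_ref if it is feasible, otherwise project it
    onto the boundary hyperplane a @ u = b.
    """
    u_ref = np.atleast_1d(np.asarray(u_ref, dtype=float))
    a = np.atleast_1d(np.asarray(a, dtype=float))
    violation = a @ u_ref - b
    if violation <= 0.0:
        return u_ref
    return u_ref - violation * a / (a @ a)

def acc_safe_accel(u_ref, gap, v, v_lead, headway=1.8, gamma=1.0):
    """Filter a desired acceleration so the barrier h = gap - headway * v stays safe.

    Assumed point-mass model: d(gap)/dt = v_lead - v and dv/dt = u.
    The CBF condition dh/dt + gamma * h >= 0 becomes
    headway * u <= (v_lead - v) + gamma * h.
    """
    h = gap - headway * v
    u = min_norm_filter(u_ref, a=[headway], b=(v_lead - v) + gamma * h)
    return float(u[0])
```

When the gap is comfortably large the desired acceleration passes through unchanged; when the headway is violated, the filter returns the smallest change to `u_ref` that restores the barrier condition.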


OriCon3D: Monocular 3D Object Detection

Key Skills & Technologies: 3D Object Detection, Machine Learning / Deep Learning, YOLO, MobileNet-v2, EfficientNet-v2, KITTI Dataset, PyTorch, Python

Description: This project developed a lightweight 3D object detection architecture for real-time autonomous driving, evaluated on the KITTI dataset. A pre-trained YOLO model combined with MultiBin regression for 3D bounding-box orientation achieved 3D IoU scores of 76.9 (Cars), 67.76 (Pedestrians), and 66.5 (Cyclists). Integrating efficient feature extractors such as MobileNet-v2 and EfficientNet-v2 reduced inference time by over 80% and improved 3D IoU by 2.4% (Cars), 1.5% (Pedestrians), and 4.5% (Cyclists), outperforming conventional methods.
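MultiBin orientation regression splits the angle range into bins, classifies which bin the angle falls in, and regresses a (cos, sin) residual within that bin. A minimal decoding sketch follows, assuming equal-width bins centred at k * 2π / num_bins; the bin layout and the function name `decode_multibin` are assumptions, and the decoded local angle would still need to be combined with the viewing-ray angle to obtain a global yaw.

```python
import math

def decode_multibin(bin_conf, bin_residuals, num_bins):
    """Recover a local orientation angle (rad) from MultiBin outputs.

    bin_conf:      per-bin confidence scores, length num_bins.
    bin_residuals: per-bin (cos, sin) residual pairs, length num_bins.
    """
    # Pick the most confident bin.
    k = max(range(num_bins), key=lambda i: bin_conf[i])
    center = k * 2.0 * math.pi / num_bins  # assumed bin centre
    cos_r, sin_r = bin_residuals[k]
    angle = center + math.atan2(sin_r, cos_r)  # atan2 tolerates unnormalised (cos, sin)
    # Wrap to [-pi, pi].
    return math.atan2(math.sin(angle), math.cos(angle))
```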

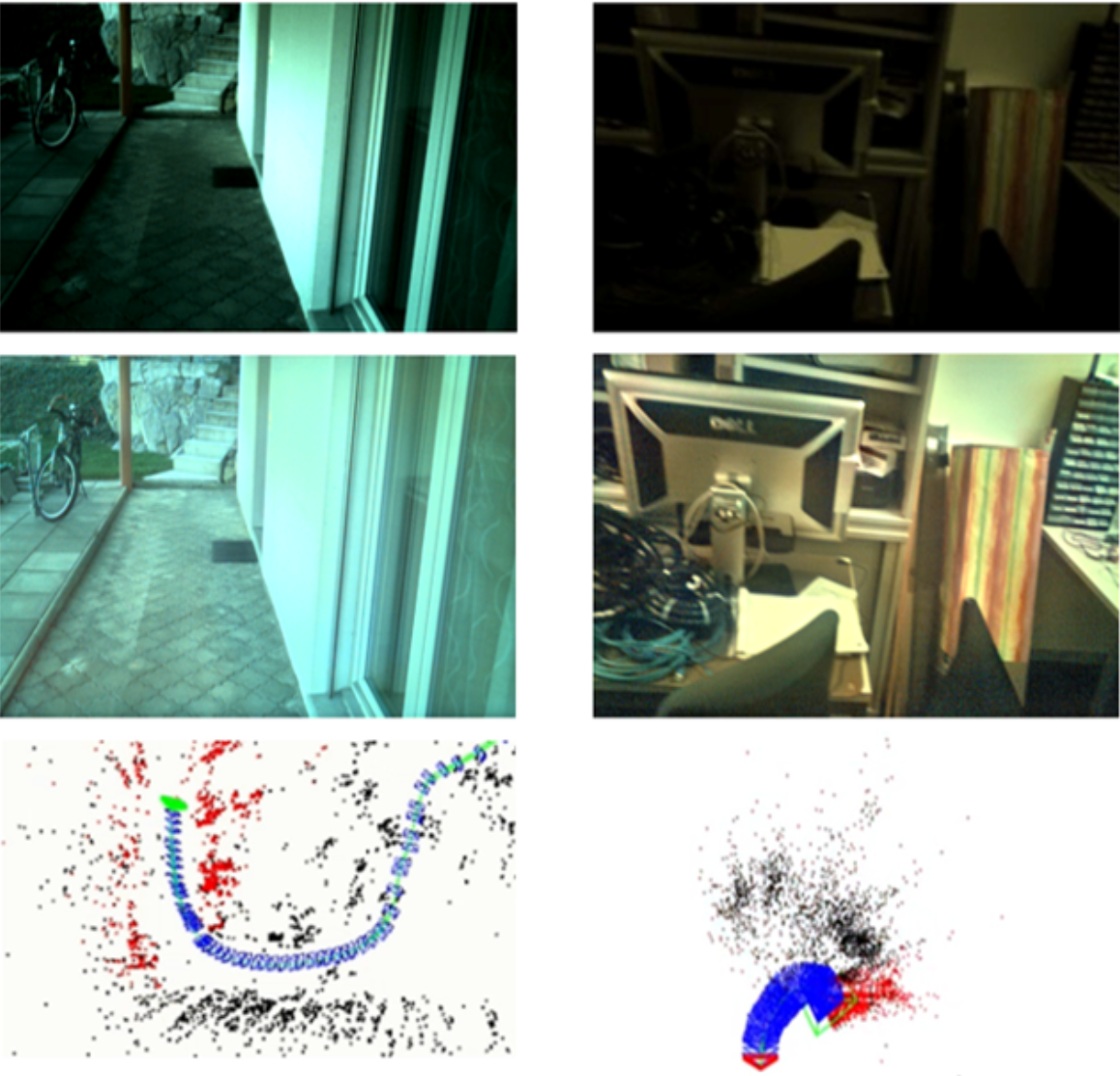

Twilight SLAM: Navigating Low-Light Environments

Key Skills & Technologies: Low-Light SLAM, ORB-SLAM3, SuperPoint-SLAM, Feature Extraction, Image Enhancement, Model Optimization, ONNX, ONNXRuntime, C++, Python

Description: Visual SLAM accuracy in low light was improved by integrating image enhancement models (Bread, Dual, EnlightenGAN, Zero-DCE) into ORB-SLAM3 and SuperPoint-SLAM. Enhancement increased the number of extracted features by 15% across varied lighting conditions and reduced the RMSE between SLAM-estimated and ground-truth poses by 18%. ORB-SLAM3 outperformed SuperPoint-SLAM, and EnlightenGAN reduced the maximum absolute pose error by 13% in low-light scenarios. For real-time performance, EnlightenGAN was exported to ONNX, giving a 3x speedup with ONNXRuntime.
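The pose-error figures above compare SLAM-estimated and ground-truth trajectories. A minimal sketch of the translational RMSE and of the relative reduction behind a figure such as "18%" is shown below. It assumes the two trajectories are already time-associated and aligned (evaluation tools typically perform an alignment step first, which is omitted here); the function names are hypothetical.

```python
import numpy as np

def ate_rmse(est_xyz, gt_xyz):
    """Root-mean-square translational error between matched, aligned positions."""
    est = np.asarray(est_xyz, dtype=float)
    gt = np.asarray(gt_xyz, dtype=float)
    errors = np.linalg.norm(est - gt, axis=1)  # per-pose Euclidean error
    return float(np.sqrt(np.mean(errors ** 2)))

def relative_reduction(baseline, improved):
    """Fractional reduction, e.g. 0.18 means the error dropped by 18%."""
    return (baseline - improved) / baseline
```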

Autonomous UAV-based Search and Rescue

Key Skills & Technologies: Q-learning, Function Approximation, MATLAB, System Identification Toolbox, PID Tuning, Gazebo, ROS, Python

Description: To improve autonomous UAV navigation in search-and-rescue (SaR) missions, this project used reinforcement learning to locate victims and track them until the rescue team arrives. An urban scenario was simulated in Gazebo with the AR.Drone, and the position controller was tuned using MATLAB's System Identification Toolbox, achieving a position error below 1 cm. Q-learning with function approximation handled the large state space and reduced training time by 50%. YOLO combined with optical flow provided real-time target tracking, performing effectively in dynamic SaR environments.
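Q-learning with function approximation replaces the Q-table with a parameterised estimate. A minimal sketch of one semi-gradient update with a linear approximator Q(s, a) = w · φ(s, a) follows. The linear form, the feature vectors, and the function name `q_learning_update` are assumptions; the project's actual features and approximator are not specified here.

```python
import numpy as np

def q_learning_update(w, phi_sa, reward, phi_next_actions, alpha=0.1, gamma=0.99, done=False):
    """One semi-gradient Q-learning step for Q(s, a) = w @ phi(s, a).

    phi_sa:           feature vector of the (state, action) pair just taken.
    phi_next_actions: feature vectors phi(s', a') for every action a' in the next state.
    """
    w = np.asarray(w, dtype=float)
    phi_sa = np.asarray(phi_sa, dtype=float)
    if done:
        target = reward  # no bootstrapping from a terminal state
    else:
        # Off-policy target: best estimated value over next-state actions.
        target = reward + gamma * max(float(w @ np.asarray(p, dtype=float)) for p in phi_next_actions)
    td_error = target - float(w @ phi_sa)
    # For a linear approximator, the gradient of Q with respect to w is phi(s, a).
    return w + alpha * td_error * phi_sa
```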

Publications

Autonomous UAV-based Target Search, Tracking and Following using Reinforcement Learning and YoloFLOW

IEEE Robotics and Automation Letters-2020

Ajmera, Y., Singh, S.

[Video] [PDF]

We developed a UAV-based system for search and rescue missions, integrating reinforcement learning for autonomous navigation and YOLO with Optical Flow for real-time target tracking. This approach enables the UAV to find and follow victims in cluttered environments, ensuring their locations are continually updated for swift evacuation. Extensive simulations demonstrate the system's effectiveness in urban search and rescue scenarios.

Twilight SLAM: Navigating Low-Light Environments

arXiv:2304.11310-2023

Singh, S., Mazotti, B., Rajani, DM., Mayilvahanan, S., Li, G., Ghaffari, M.

[Video] [PDF]

This work presents a detailed examination of low-light visual Simultaneous Localization and Mapping (SLAM) pipelines, focusing on integrating state-of-the-art (SOTA) low-light image enhancement algorithms with standard and contemporary SLAM frameworks. The primary objective of our work is to address a pivotal question: does illuminating the visual input significantly improve localization accuracy in both semi-dark and dark environments?

OriCon3D: Effective 3D Object Detection using Orientation and Confidence

arXiv:2304.11310-2023

Rajani, DM., Singh, S., Swayampakula, RK.

[PDF]

In this work, we propose a simple yet effective method for detecting 3D objects and precisely estimating their spatial positions from a single image. Unlike conventional frameworks that rely solely on center-point and dimension predictions, our approach uses a deep convolutional neural network-based paradigm for weighted 3D object orientation regression. These estimates are then combined with geometric constraints from the 2D bounding box to derive a complete 3D bounding box.

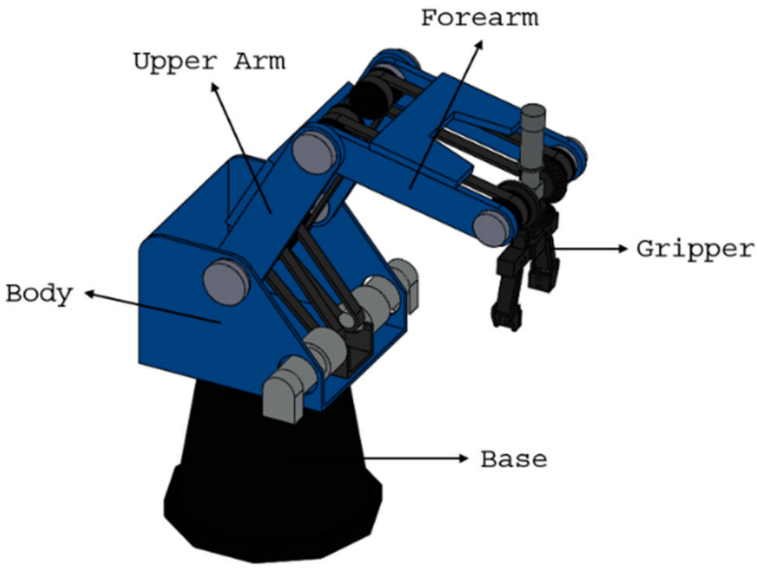

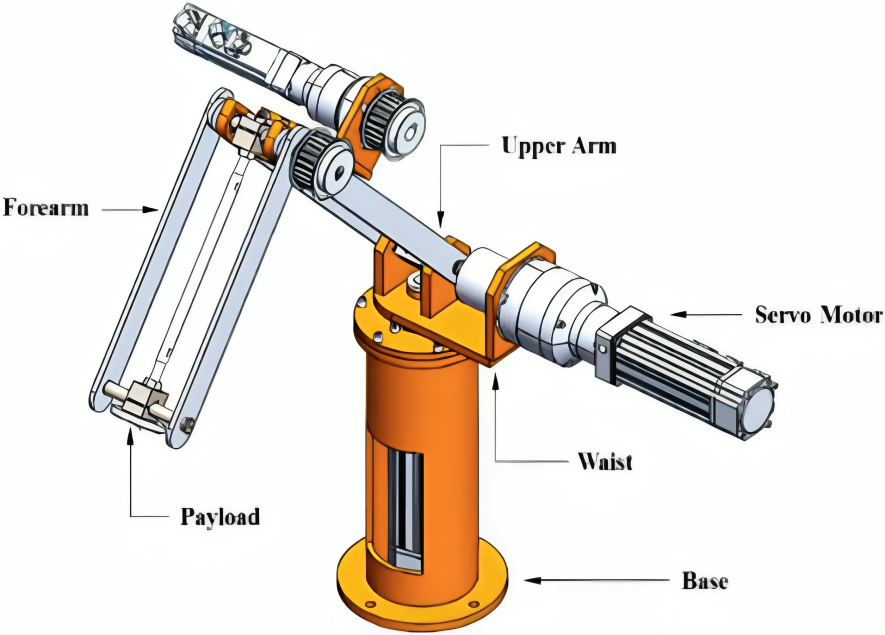

Energy Efficiency Enhancement of SCORBOT ER-4U Manipulator Using Topology Optimization Method

Mechanics Based Design of Structures and Machines-2021

Srinivas, L., Aadityaa, J., Singh, S., Javed, A.

[PDF]

In this work, topology optimization of the upper arm and forearm of a 6-DoF SCORBOT manipulator was performed under dynamic loading conditions. A motion study in SolidWorks showed a 30% reduction in peak stress and a 15% decrease in deflection. Additionally, a Lagrange-Euler dynamic model in MATLAB demonstrated a 40% increase in energy efficiency.

Experimental evaluation of topologically optimized Manipulator-link using PLC and HMI based control system

International Mechanical Engineering Congress-2019

Srinivas, L., Singh, S., Javed, A.

[PDF]

In this work, a universal test setup for a 1-DoF manipulator link was developed to validate von Mises stress values under static loading conditions. Strain data captured with LabVIEW and a DAQ system showed stress measurements within 1.27% of MATLAB simulations. Dynamic stress analysis of a 3-DoF TRR manipulator in MSC Adams achieved a mean error under 2% relative to simulations.

Education

University of Michigan, US

Aug 2022 - May 2024, Master of Science in Robotics

Birla Institute of Technology & Science Pilani, Hyderabad, India

Aug 2017 - Jun 2021, Bachelor of Engineering in Mechanical Engineering